Computational complexity theory

Latest revision as of 09:12, 6 October 2024

Computational complexity theory is a branch of the theory of computation in computer science that describes the scalability of algorithms and the inherent difficulty of providing scalable algorithms for specific computational problems. That is, the theory answers the question, "As the size of the input to an algorithm increases, how do the running time and memory requirements of the algorithm change?" Computational complexity theory deals with problems that are computable; this differs from computability theory, which deals with whether a problem can be solved at all, regardless of the resources required.

The implications of the theory are important in many other fields. The speed and memory capacity of computers are always increasing, but then so are the sizes of the data sets that need to be analyzed. If algorithms fail to scale well, then even vast improvements in computing technology will result in only marginal increases in practical data size.

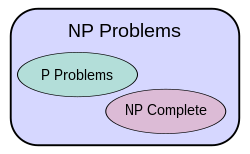

Algorithms and problems are categorized into complexity classes. The most important open question of complexity theory is whether the complexity class P is the same as the complexity class NP, or is merely a subset of it, as is generally believed. The question is far from an esoteric exercise: shortly after it was first posed, it was realized that many important industrial problems in operations research belong to an NP subclass called NP-complete. NP-complete problems have the property that proposed solutions are quick to check, yet current methods for finding exact solutions do not scale. If the NP class is larger than P, then no efficiently scalable solution exists for these problems.

Overview

A single "problem" is a complete set of related questions, where each question is a finite-length string. For example, the problem FACTORIZE is: given an integer written in binary, return all of the prime factors of that number. A particular question is called an instance. For example, "give the factors of the number 15" is one instance of the FACTORIZE problem.

The time complexity of a problem is the number of steps it takes to solve an instance of the problem as a function of the size of the input (usually measured in bits), using the most efficient algorithm. To understand this intuitively, consider an instance that is n bits long and can be solved in n² steps. In this example we say the problem has a time complexity of n². Of course, the exact number of steps depends on the machine or language being used. To abstract away these details, Big O notation is generally used. If a problem has time complexity O(n²) on one typical computer, then it will also have complexity O(n²) on most other computers, so this notation allows us to generalize away from the details of a particular computer. See Complexity of algorithms for further information on time complexity.
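As a rough sketch (an illustration chosen here, not taken from the sources), the hypothetical function below counts the comparisons made by a naive duplicate check that compares every pair of items. For n distinct items it performs n(n - 1)/2 comparisons, which is O(n²). This counts the steps of one particular algorithm, so it is only an upper bound on the complexity of the problem itself; sorting the items first solves the same problem with O(n log n) comparisons.

```python
from typing import List, Tuple


def has_duplicate(values: List[int]) -> Tuple[bool, int]:
    """Naive duplicate check; returns (answer, number of comparisons made)."""
    steps = 0
    n = len(values)
    for i in range(n):
        for j in range(i + 1, n):
            steps += 1  # count each pairwise comparison as one step
            if values[i] == values[j]:
                return True, steps
    return False, steps


# Doubling n roughly quadruples the step count, as O(n^2) predicts.
for n in (10, 20, 40):
    print(n, has_duplicate(list(range(n)))[1])  # step counts: 45, 190, 780
```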

Example: mowing grass has linear time complexity, because it takes twice as long to mow twice the area. Looking up a word in a dictionary, however, has only logarithmic time complexity: a dictionary twice the size needs to be opened only one more time (for example, opening it exactly in the middle halves the remaining problem size).
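The dictionary analogy can be made concrete with a small sketch that counts probes. The function names are hypothetical, and a sorted Python list stands in for the dictionary. A linear scan may need one probe per entry, while binary search halves the remaining range at each probe, so doubling the list adds at most one probe.

```python
from typing import List


def linear_search_probes(items: List[int], target: int) -> int:
    """Scan from the start; return how many entries were examined."""
    probes = 0
    for x in items:
        probes += 1
        if x == target:
            break
    return probes


def binary_search_probes(items: List[int], target: int) -> int:
    """Binary search on a sorted list; return how many entries were examined."""
    lo, hi = 0, len(items)  # search the half-open range [lo, hi)
    probes = 0
    while lo < hi:
        probes += 1
        mid = (lo + hi) // 2  # "open the dictionary in the middle"
        if items[mid] < target:
            lo = mid + 1
        elif items[mid] > target:
            hi = mid
        else:
            break
    return probes


for size in (1000, 2000, 4000):
    words = list(range(size))
    worst = max(binary_search_probes(words, t) for t in words)
    print(size, linear_search_probes(words, size - 1), worst)
```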

The space complexity of a problem is a related concept that measures the amount of space, or memory, required by the algorithm. An informal analogy is the amount of scratch paper needed while working out a problem with pen and paper. Space complexity is also measured with Big O notation.
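A minimal sketch of the distinction, using palindrome checking as an illustrative problem (not one discussed in the sources): both functions below take O(n) time, but the first builds a reversed copy of the input and so uses O(n) extra memory, while the second keeps only two indices, which is O(1) extra memory beyond the input.

```python
def is_palindrome_copy(s: str) -> bool:
    """O(n) extra space: s[::-1] allocates a full reversed copy."""
    return s == s[::-1]


def is_palindrome_two_pointers(s: str) -> bool:
    """O(1) extra space: only two indices walk inward from the ends."""
    i, j = 0, len(s) - 1
    while i < j:
        if s[i] != s[j]:
            return False
        i += 1
        j -= 1
    return True


print(is_palindrome_two_pointers("racecar"), is_palindrome_two_pointers("abca"))
```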

A different measure of problem complexity, which is useful in some cases, is circuit complexity. This is a measure of the size of a boolean circuit needed to compute the answer to a problem, in terms of the number of logic gates required to build the circuit. Such a measure is useful, for example, when designing hardware microchips to compute the function instead of software.
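As an illustrative sketch, the hypothetical helpers below represent a Boolean circuit as a list of two-input gates and build a circuit for the parity of n bits (whether an odd number of them are 1). Assuming XOR counts as a single gate, the chain uses n - 1 gates, so its size grows linearly with n; if only AND, OR and NOT gates were allowed, each XOR would cost a few gates, changing the count only by a constant factor.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# A gate is (operation, wire_a, wire_b). Wires 0..n-1 carry the n inputs;
# the i-th gate (counting from 0) writes its output to wire n + i.
Gate = Tuple[str, int, int]

OPS: Dict[str, Callable[[int, int], int]] = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "XOR": lambda a, b: a ^ b,
}


def parity_circuit(n: int) -> List[Gate]:
    """Chain of XOR gates computing the parity of n >= 1 input bits."""
    gates: List[Gate] = []
    acc = 0  # wire currently holding the parity of inputs 0..i-1
    for i in range(1, n):
        gates.append(("XOR", acc, i))
        acc = n + len(gates) - 1  # output wire of the gate just added
    return gates


def evaluate(gates: List[Gate], inputs: Sequence[int]) -> int:
    """Evaluate the gates in order; the last wire is the circuit's output."""
    wires = list(inputs)
    for op, a, b in gates:
        wires.append(OPS[op](wires[a], wires[b]))
    return wires[-1]


print(len(parity_circuit(8)))  # 7 gates for 8 inputs
```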

Decision problems

Much of complexity theory deals with decision problems. A decision problem is a problem whose answer is always yes or no. Complexity theory distinguishes between verifying yes answers and verifying no answers. A problem that reverses the yes and no answers of another problem is called the complement of that problem.

For example, the well-known decision problem IS-PRIME returns yes when a given integer is prime and no otherwise, while the problem IS-COMPOSITE determines whether a given integer greater than 1 is not prime, that is, whether it is composite. Whenever IS-PRIME returns yes, IS-COMPOSITE returns no, and vice versa. So IS-COMPOSITE is the complement of IS-PRIME, and similarly IS-PRIME is the complement of IS-COMPOSITE.
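A minimal sketch of the complement relationship, using trial division as a deliberately simple primality test (an assumption of this sketch): IS-COMPOSITE is obtained by running IS-PRIME and flipping its answer, so on integers greater than 1 it costs essentially the same. Trial division takes time exponential in the number of bits of n, so this illustrates complements rather than an efficient algorithm; primality is in fact known to be decidable in polynomial time.

```python
def is_prime(n: int) -> bool:
    """IS-PRIME by trial division (exponential in the bit length of n)."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


def is_composite(n: int) -> bool:
    """IS-COMPOSITE for n >= 2: run IS-PRIME and flip the answer."""
    if n < 2:
        raise ValueError("IS-COMPOSITE is only considered for integers n >= 2")
    return not is_prime(n)


print(is_prime(15), is_composite(15))  # False True
```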

Decision problems are often considered because an arbitrary problem can always be reduced to some decision problem. For instance, the problem HAS-FACTOR is: given integers n and k written in binary, return whether n has any prime factor less than k. If we can solve HAS-FACTOR with a certain amount of resources, then we can use that solution to solve FACTORIZE without using many more resources. This is accomplished by doing a binary search on k until the smallest factor of n is found, then dividing out that factor and repeating until all the factors are found.
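The reduction from FACTORIZE to HAS-FACTOR can be sketched as follows. Here `has_factor` stands in for whatever procedure solves HAS-FACTOR; it is implemented by brute force purely so the sketch runs, and both names are hypothetical. The point is `factorize`, which only ever asks yes/no questions: because `has_factor(n, k)` is false for every k up to the smallest prime factor p and true for every larger k, binary search finds p with about log₂ n queries, a number proportional to the bit length of n.

```python
from typing import List


def has_factor(n: int, k: int) -> bool:
    """HAS-FACTOR stand-in: does n (>= 2) have a prime factor less than k?

    Any divisor d with 2 <= d < k implies a prime factor <= d < k, so
    checking all divisors below k is equivalent (brute force, illustration only).
    """
    return any(n % d == 0 for d in range(2, min(k, n + 1)))


def factorize(n: int) -> List[int]:
    """Solve FACTORIZE using only yes/no answers from has_factor."""
    factors = []
    while n > 1:
        # Invariant: has_factor(n, lo) is False and has_factor(n, hi) is True.
        # has_factor(n, 2) is False (no primes below 2); has_factor(n, n + 1)
        # is True because every prime factor of n is at most n.
        lo, hi = 2, n + 1
        while hi - lo > 1:
            mid = (lo + hi) // 2
            if has_factor(n, mid):
                hi = mid
            else:
                lo = mid
        # Now hi == p + 1 for the smallest prime factor p, so p == lo.
        factors.append(lo)
        n //= lo
    return factors


print(factorize(15))  # [3, 5]
```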

An important result in complexity theory is the fact that no matter how hard a problem can get (i.e. how much time and space resources it requires), there will always be even harder problems. For time complexity, this is proven by the time hierarchy theorem. A similar space hierarchy theorem can also be derived.

Computational resources

Complexity theory analyzes the difficulty of computational problems in terms of many different computational resources. The same problem can be analyzed in terms of the necessary amounts of many different computational resources, including time, space, randomness, alternation, and other less-intuitive measures. A complexity class is the set of all of the computational problems which can be solved using a certain amount of a certain computational resource.

Perhaps the most well-studied computational resources are deterministic time (DTIME) and deterministic space (DSPACE). These resources represent the amount of computation time and memory space needed on a deterministic computer, such as the computers that actually exist, and they are of great practical interest.

Some computational problems are easier to analyze in terms of more unusual resources. For example, a nondeterministic Turing machine is a computational model that is allowed to branch out to check many different possibilities at once. The nondeterministic Turing machine has very little to do with how algorithms are physically computed, but its branching exactly captures many of the mathematical models we want to analyze, which makes nondeterministic time a very important resource in analyzing computational problems.
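One way to picture nondeterministic branching is to simulate it deterministically. In the sketch below, the illustrative problem is subset sum (does some subset of the numbers add up to a target?), which is not discussed in the sources. A nondeterministic machine can be thought of as guessing, for each number, whether to include it, and then checking the guess quickly; a deterministic simulation has to try every branch, and there are 2ⁿ of them for n numbers.

```python
from itertools import product
from typing import Sequence


def subset_sum_by_branching(values: Sequence[int], target: int) -> bool:
    """Deterministically try every branch a nondeterministic machine could take."""
    # Each branch is one tuple of yes/no guesses, one guess per value;
    # product(...) enumerates all 2**len(values) of them.
    for guesses in product((False, True), repeat=len(values)):
        chosen_total = sum(v for v, take in zip(values, guesses) if take)
        if chosen_total == target:  # checking a single branch is fast
            return True  # the machine accepts if any branch accepts
    return False


print(subset_sum_by_branching([3, 34, 4, 12, 5, 2], 9))  # True (4 + 5)
```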

Many more unusual computational resources have been used in complexity theory. Technically, any complexity measure can be viewed as a computational resource, and complexity measures are very broadly defined by the Blum complexity axioms.

Complexity classes

A complexity class is the set of all of the computational problems which can be solved using a certain amount of a certain computational resource.

The complexity class P is the set of decision problems that can be solved by a deterministic machine in polynomial time. This class corresponds to an intuitive idea of the problems that can be solved efficiently in the worst case.[1]

The complexity class NP is the set of decision problems that can be solved by a non-deterministic machine in polynomial time. This class contains many problems that people would like to be able to solve efficiently, including the Boolean satisfiability problem, the Hamiltonian path problem and the vertex cover problem. All the problems in this class have the property that their solutions can be checked efficiently.[1]
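The "checked efficiently" property can be illustrated with the vertex cover problem: given a graph and a number k, is there a set of at most k vertices touching every edge? Finding such a set may be hard, but checking a proposed one (a certificate) is easy. The hypothetical verifier below assumes the graph is given as a list of edges (pairs of vertex labels) and runs in time roughly linear in the number of edges.

```python
from typing import Iterable, List, Tuple

Edge = Tuple[int, int]


def verify_vertex_cover(edges: List[Edge], cover: Iterable[int], k: int) -> bool:
    """Accept iff the certificate has at most k vertices and touches every edge."""
    chosen = set(cover)  # set membership tests are fast on average
    if len(chosen) > k:
        return False
    return all(u in chosen or v in chosen for u, v in edges)


edges = [(0, 1), (1, 2), (2, 3)]
print(verify_vertex_cover(edges, {1, 2}, 2))  # True
```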

Many complexity classes can be characterized in terms of the mathematical logic needed to express them - this field is called descriptive complexity.

Open questions

The P = NP question

- See also: Complexity classes P and NP, Oracle machine, and NP complexity class

The question of whether NP is the same set as P (that is, whether problems that can be solved in non-deterministic polynomial time can also be solved in deterministic polynomial time) is one of the most important open questions in theoretical computer science, because of the wide implications a solution would have.[1] If it turned out that P = NP, one negative consequence would be that many forms of cryptography would become easy to "crack" and would therefore be useless. However, there would also be enormous positive consequences, as many important problems would be shown to have efficient solutions. These include various types of integer programming in operations research, many problems in logistics, protein structure prediction in biology, and the ability to find formal proofs of pure mathematics theorems efficiently using computers.[2][3] The Clay Mathematics Institute announced in 2000 that it would pay a US$1,000,000 prize to the first person to resolve the question with a proof.[4]

Questions like this motivate the concepts of hard and complete. A set of problems X is hard for a set of problems Y if every problem in Y can be transformed "easily" into some problem in X that gives the same answer. The definition of "easily" differs from context to context. An important hard set in complexity theory is NP-hard: the set of problems, not necessarily in NP themselves, to which every NP problem can be reduced in polynomial time.
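As an illustration of a polynomial-time reduction (chosen here, not taken from the sources), the independent set problem (is there a set of k vertices, no two of them adjacent?) reduces to the vertex cover problem: a set S of vertices is independent exactly when the remaining vertices form a vertex cover, so a graph on n vertices has an independent set of size k if and only if it has a vertex cover of size at most n - k. The reduction itself only rewrites k. The brute-force solvers, which take exponential time, are included only so the equivalence can be checked on small graphs; all names are hypothetical.

```python
from itertools import combinations
from typing import List, Tuple

Edge = Tuple[int, int]


def reduce_is_to_vc(n: int, edges: List[Edge], k: int) -> Tuple[int, List[Edge], int]:
    """Map an INDEPENDENT-SET instance (G, k) to a VERTEX-COVER instance (G, n - k)."""
    return n, edges, n - k


def has_independent_set(n: int, edges: List[Edge], k: int) -> bool:
    """Brute force: is there a set of exactly k vertices with no edge inside it?"""
    if not 0 <= k <= n:
        return False
    return any(all(not (u in s and v in s) for u, v in edges)
               for s in map(set, combinations(range(n), k)))


def has_vertex_cover(n: int, edges: List[Edge], k: int) -> bool:
    """Brute force: is there a set of at most k vertices touching every edge?"""
    if k < 0:
        return False
    return any(all(u in c or v in c for u, v in edges)
               for size in range(min(k, n) + 1)
               for c in map(set, combinations(range(n), size)))


# The answers agree for every k on a small example (the path 0-1-2-3).
path = [(0, 1), (1, 2), (2, 3)]
for k in range(6):
    assert has_independent_set(4, path, k) == has_vertex_cover(*reduce_is_to_vc(4, path, k))
```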

Set X is complete for Y if it is hard for Y and is also a subset of Y. An important complete set in complexity theory is the NP-complete set. This set contains the most "difficult" problems in NP, in the sense that they are the ones most likely not to be in P. Because the P = NP problem remains unsolved, showing that a known NP-complete problem can be reduced in polynomial time to a given problem indicates that there is no known polynomial-time solution for that problem. Similarly, because all NP problems can be reduced to the set, finding an NP-complete problem that can be solved in polynomial time would mean that P = NP.[1]

Incomplete problems in NP

Another open question related to the P = NP problem is whether there exist problems that are in NP but not in P and are not NP-complete. In other words, these would be problems that can be solved in non-deterministic polynomial time but not in deterministic polynomial time, and to which not every NP problem can be reduced in polynomial time. A candidate for such a problem, known to be in NP but not known to be NP-complete nor known to be in P, is the graph isomorphism problem, which asks whether two graphs are isomorphic (that is, whether the vertices of one can be relabeled so that its edges exactly match those of the other). It has been shown that if P ≠ NP then such problems exist.[6]
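Membership of graph isomorphism in NP rests on a short certificate: a relabeling of the vertices. The hypothetical checker below assumes both graphs are simple and undirected, with vertices 0, ..., n - 1 given as edge lists, and verifies a proposed relabeling in polynomial time. It says nothing about how to find such a relabeling, which is the hard part.

```python
from typing import List, Tuple

Edge = Tuple[int, int]


def is_isomorphism(n: int, edges_g: List[Edge], edges_h: List[Edge],
                   mapping: List[int]) -> bool:
    """Check that sending vertex i of G to mapping[i] of H is an isomorphism."""
    if sorted(mapping) != list(range(n)):
        return False  # mapping is not a bijection on {0, ..., n - 1}
    g_mapped = {frozenset((mapping[u], mapping[v])) for u, v in edges_g}
    h = {frozenset(e) for e in edges_h}
    # Because mapping is a bijection, edges map one-to-one, so equal edge
    # sets mean adjacency is preserved in both directions.
    return g_mapped == h


# G is the path 0-1-2 (center 1); H is the path 1-0-2 (center 0).
print(is_isomorphism(3, [(0, 1), (1, 2)], [(1, 0), (0, 2)], [1, 0, 2]))  # True
```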

NP = co-NP

Here co-NP is the set containing the complement problems (i.e. problems with the yes/no answers reversed) of NP problems. It is believed that the two classes are not equal, but this has not yet been proven. It has been shown that if these two complexity classes are not equal, then no NP-complete problem can be in co-NP and no co-NP-complete problem can be in NP.[6]

The class P has been proven to be closed under complement; that is, P = co-P. Therefore, if P = NP, another significant result would follow: NP = co-NP, since then NP = P = co-P = co-NP.

Intractability

Problems that are solvable in theory in finite time, but cannot be solved in practice in a reasonable amount of time, are called intractable. What can be solved "in practice" is open to debate, but in general only problems that have polynomial-time solutions are solvable for more than the smallest inputs. Problems that are known to be intractable include those that are EXPTIME-complete. If, as most researchers believe, NP is not the same as P, then the NP-complete problems are also intractable. In practice, NP-complete and NP-hard problems are treated as intractable, and approximation algorithms are generally used instead.
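A classic example of an approximation algorithm, named here as an illustration rather than drawn from the sources, is the 2-approximation for the NP-hard problem of finding a smallest vertex cover: repeatedly pick an edge with neither endpoint covered and add both endpoints. The chosen edges share no endpoints, and any vertex cover must contain at least one endpoint of each of them, so the result is at most twice the size of an optimal cover. The sketch assumes the graph is given as an edge list.

```python
from typing import List, Set, Tuple

Edge = Tuple[int, int]


def approx_vertex_cover(edges: List[Edge]) -> Set[int]:
    """Greedy matching-based cover, at most twice the optimal size."""
    cover: Set[int] = set()
    for u, v in edges:
        if u not in cover and v not in cover:
            # This edge is uncovered: take both endpoints. Edges picked here
            # never share an endpoint, i.e. they form a matching.
            cover.add(u)
            cover.add(v)
    return cover


print(approx_vertex_cover([(0, 1), (1, 2), (2, 3)]))
```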

To see why exponential-time solutions are not usable in practice, consider a problem that requires 2ⁿ operations to solve (n is the size of the input). For a relatively small input size of n = 100, and assuming a computer that can perform 10¹⁰ (10 giga) operations per second, a solution would take about 4 × 10¹² years, much longer than the current age of the universe.
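The arithmetic behind this estimate can be checked directly; the sketch assumes a year of 365.25 days.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # assumption: Julian year
operations = 2 ** 100          # 2^n operations for n = 100
ops_per_second = 10 ** 10      # 10 giga-operations per second
years = operations / ops_per_second / SECONDS_PER_YEAR
print(f"{years:.2e} years")    # prints 4.02e+12 years
```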

Attribution

- Some content on this page may previously have appeared on Wikipedia.

Footnotes

- [1] Sipser, Michael (2006). “Time Complexity”, Introduction to the Theory of Computation, 2nd edition. USA: Thomson Course Technology. ISBN 053495097 (?).

- [2] Berger, Bonnie A.; Leighton, Terrance (1998). "Protein folding in the hydrophobic-hydrophilic (HP) model is NP-complete". Journal of Computational Biology 5: 27–40.

- [3] Cook, Stephen (April 2000). "The P versus NP Problem". Clay Mathematics Institute. Retrieved on 2006-10-18.

- [4] Jaffe, Arthur M. "The Millennium Grand Challenge in Mathematics". Notices of the AMS 53 (6). Retrieved on 2006-10-18.

- [5] Ladner, Richard E. (1975). "On the structure of polynomial time reducibility". Journal of the ACM (JACM) 22: 151–171. DOI:10.1145/321864.321877.

- [6] Du, Ding-Zhu; Ko, Ker-I (2000). Theory of Computational Complexity. John Wiley & Sons. ISBN 0-471-34506-7.

OTHER:

- L. Fortnow, Steve Homer (2002/2003). A Short History of Computational Complexity. In D. van Dalen, J. Dawson, and A. Kanamori, editors, The History of Mathematical Logic. North-Holland, Amsterdam.

- Jan van Leeuwen, ed. Handbook of Theoretical Computer Science, Volume A: Algorithms and Complexity, The MIT Press/Elsevier, 1990. ISBN 0-444-88071-2 (Volume A). QA 76.H279 1990. A large compendium of information, with thousands of references across its articles.